GPU Essentials - A Concise Technical Guide

This is a concise GPU introduction I found helpful. With it, you can start CUDA programming and understand the basic terms you’ll encounter. These notes are adapted from this article, which was itself inspired by Jen-Hsun Huang’s keynote at Hot Chips 21 in 2009. Although the article was published in 2010—15 years ago—the GPU architecture concepts and terminology haven’t changed much. Modern GPUs include additional features for improved performance, but the fundamentals remain largely the same.

With the GPU’s rapid evolution from a configurable graphics processor to a programmable parallel processor, the ubiquitous GPU in every PC, laptop, desktop, and workstation is a many-core multi-threaded multiprocessor that excels at both graphics and computing applications.

GPU computing’s evolution

- Rendering high-definition graphics scenes is a problem with tremendous inherent parallelism. A graphics programmer writes a single-thread program that draws one pixel, and the GPU runs multiple instances of this thread in parallel—drawing multiple pixels in parallel.

- Also, GPU computing programs—written in C or C++ with the CUDA parallel computing model, or using a parallel computing API inspired by CUDA such as Direct- Compute or OpenCL — scale transparently over a wide range of parallelism. Software scalability, too, has enabled GPUs to rapidly increase their parallelism and performance with increasing transistor density.

- Evolving to modern GPUs involved adding programmability incrementally—from fixed function pipelines to microcoded processors, configurable processors, programmable processors, and scalable parallel processors.

- GPUs first used floating-point arithmetic to calculate 3D geometry and vertices, then applied it to pixel lighting and color values to handle high-dynamic-range scenes and to simplify programming.

- The GeForce 6800 scalable processor core architecture facilitated multiple GPU implementations with different numbers of processor cores.

- Early GPGPU computing programs achieved high performance, but were difficult to write because programmers had to express non-graphics computations with a graphics API such as OpenGL.

- The GeForce 8800 introduced in 2006 featured the first unified graphics and computing GPU architecture7,8 programmable in C with the CUDA parallel computing model, in addition to using DX10 and OpenGL.

- Its unified streaming processor cores executed vertex, geometry, and pixel shader threads for DX10 graphics programs, and also executed computing threads for CUDA C programs.

- Hardware multithread- ing enabled the GeForce 8800 to efficiently execute up to 12,288 threads concurrently in 128 processor cores.

- NVIDIA deployed the scalable architecture in a family of GeForce GPUs with different numbers of processor cores for each market segment.

- The GeForce 8800 was the first GPU to use scalar thread processors rather than vector processors, matching standard scalar languages like C, and eliminating the need to manage vector registers and program vector operations.

- (# Note: Scalar processors execute one operation per thread on a single data element, while vector processors execute the same operation on multiple data elements at once, requiring explicit vector instructions #)

- It added instructions to support C and other general-purpose languages, including integer arithmetic, IEEE 754 floating-point arithmetic, and load/store memory access instructions with byte addressing.

- It provided hardware and instructions to support parallel computation, communication, and synchronization—including thread arrays, shared memory, and fast barrier synchronization.

- (# Note: Fast barrier synchronization is a mechanism that quickly pauses threads in a block until all have reached the same point, ensuring they proceed together without race conditions #)

- NVIDIA introduced the third-generation Fermi GPU computing architecture in 2009.

- Fermi implemented IEEE 754-2008 and significantly increased double-precision performance. It added error-correcting code (ECC) memory protection for large-scale GPU computing, 64-bit unified addressing, cached memory hierarchy, and instructions for C, C++, Fortran, OpenCL, and DirectCompute.

- The GPU computing ecosystem is expanding rapidly, enabled by the deployment of more than 180 million CUDA-capable GPUs.

- NVIDIA developed the parallel Nsight GPU development environment, debugger, and analyzer integrated with Microsoft Visual Studio.

CUDA scalable parallel architecture

- CUDA is a hardware and software coprocessing architecture for parallel computing that enables NVIDIA GPUs to execute programs written with C, C++, Fortran, OpenCL, DirectCompute, and other languages.

- Because most languages were designed for one sequential thread, CUDA preserves this model and extends it with a minimalist set of abstractions for expressing parallelism. This lets the programmer focus on the important issues of parallelism—how to design efficient parallel algorithms—using a familiar language.

- By design, CUDA enables the development of highly scalable parallel programs that can run across tens of thousands of concurrent threads and hundreds of processor cores.

- A compiled CUDA program executes on any size GPU, automatically using more parallelism on GPUs with more processor cores and threads.

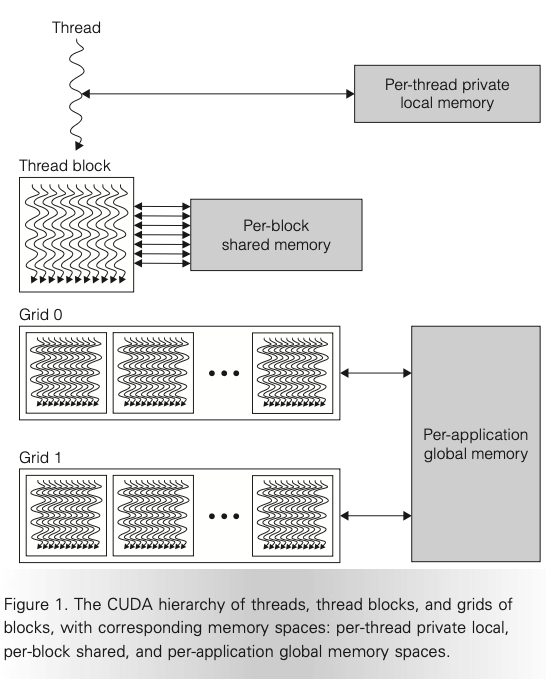

- A CUDA program is organized into a host program, consisting of one or more sequential threads running on a host CPU, and one or more parallel kernels suitable for execution on a parallel computing GPU. A kernel executes a sequential program on a set of lightweight parallel threads. As Figure 1 shows, the programmer or compiler organizes these threads into a grid of thread blocks. The threads comprising a thread block can synchronize with each other via barriers and communicate via a high-speed, per-block shared memory.

- Threads from different blocks in the same grid can coordinate via atomic operations in global memory space shared by all threads. Sequentially dependent kernel grids can synchronize via global barriers and coordinate via global shared memory.

- CUDA requires that thread blocks be independent, which provides scalability to GPUs with different numbers of processor cores and threads.

- Thread blocks implement coarse-grained scalable data parallelism, while the light-weight threads comprising each thread block provide fine-grained data parallelism. Thread blocks executing different kernels implement coarse-grained task parallelism. Threads executing different paths implement fine-grained thread-level parallelism.

- (# Note: Imagine a restaurant kitchen — multiple kitchens (thread blocks) each cook the same dish in parallel = coarse-grained data parallelism; within one kitchen, many chefs (threads) chop ingredients simultaneously = fine-grained data parallelism; if different kitchens prepare entirely different dishes = coarse-grained task parallelism; if chefs in the same kitchen follow slightly different recipes = fine-grained thread-level parallelism #)

- (# Note: Think of a university — each class (thread block) works on the same assignment = coarse-grained data parallelism; within a class, each student (thread) solves a small part of the assignment = fine-grained data parallelism; if different classes work on different subjects = coarse-grained task parallelism; if students in the same class take different approaches to solving a problem = fine-grained thread-level parallelism #)

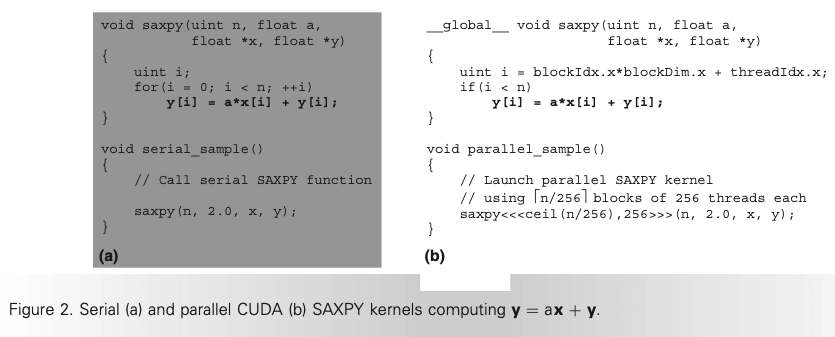

- Figure 2 shows some basic features of parallel programming with CUDA. It contains sequential and parallel implementations of the SAXPY routine defined by the basic linear algebra subroutines (BLAS) library.

- The serial implementation is a simple loop that computes one element of y per iteration. The parallel kernel executes each of these independent iterations in parallel, assigning a separate thread to compute each element of y.

- The __ global __ modifier indicates that the procedure is a kernel entry point, and the extended function-call syntax saxpy«<B, T»>(. . .) launches the kernel saxpy() in parallel across B blocks of T threads each.

- Each thread determines which element it should process from its integer thread block index blockIdx.x, its thread index within its block threadIdx.x, and the total number of threads per block blockDim.x.

- This example demonstrates a common parallelization pattern, where we can transform a serial loop with independent iterations to execute in parallel across many threads.

- In the CUDA paradigm, the programmer writes a scalar program—the parallel saxpy() kernel—that specifies the behavior of a single thread of the kernel. This lets CUDA leverage standard C language with only a few small additions, such as built-in thread and block index variables.

GPU computing architecture

- To address different market segments, GPU architectures scale the number of processor cores and memories to implement different products for each segment while using the same scalable architecture and software.

- NVIDIA’s scalable GPU computing architecture varies the number of streaming multi-processors to scale computing performance, and varies the number of DRAM memories to scale memory bandwidth and capacity.

- Each multithreaded streaming multiprocessor provides sufficient threads, processor cores, and shared memory to execute one or more CUDA thread blocks. The parallel processor cores within a streaming multi-processor execute instructions for parallel threads.

- (# Note: Picture a library — the building is a streaming multiprocessor (SM), the reading tables inside are processor cores, and the shared bookshelf is shared memory. A group of students (a thread block) comes in; they can sit across tables, use the shared books, and study in parallel. Multiple groups can use the same library if resources allow #)

- Multiple streaming multiprocessors provide coarse-grained scalable data and task parallelism to execute multiple coarse-grained thread blocks (possibly running different kernels) in parallel.

- (# Note: Imagine a city with many libraries (multiple SMs). Each library can host different study groups (thread blocks). Some groups may study the same subject = data parallelism, while others study different subjects = task parallelism. Because there are many libraries, multiple groups can work in parallel at a larger scale #)

- Multithreading and parallel-pipelined processor cores within each streaming multiprocessor implement fine-grained data and thread-level parallelism to execute hundreds of fine-grained threads in parallel.

- (# Note: Think of an assembly line in a factory — each worker (core) handles a specific step, and many items (threads) move through simultaneously. Because there are many workers and multiple lines, hundreds of small tasks get done in parallel with no idle time = fine-grained parallelism #)

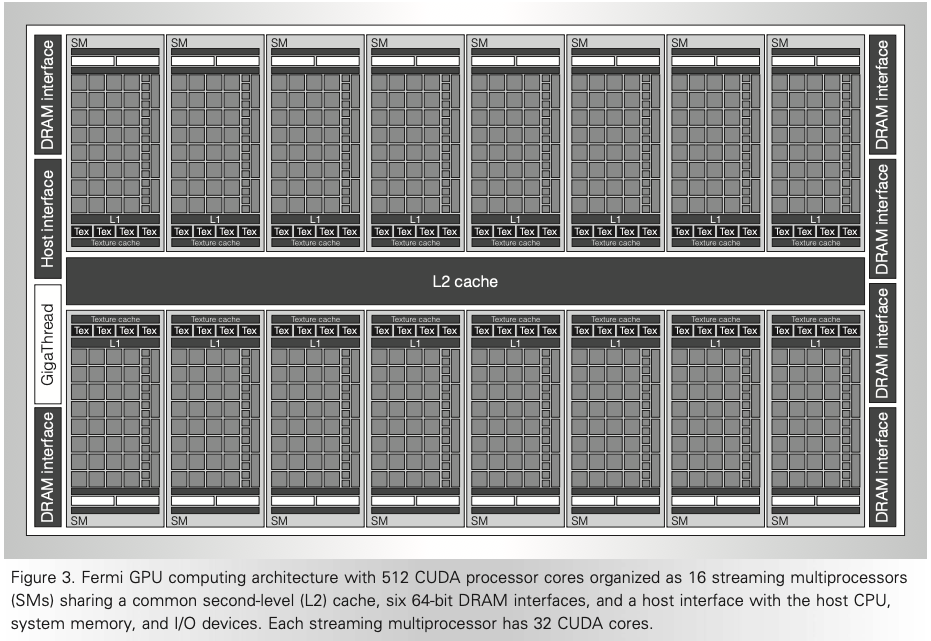

- To illustrate GPU computing architecture, Figure 3 shows the third-generation Fermi computing architecture configured with 16 streaming multiprocessors, each with 32 CUDA processor cores, for a total of 512 cores.

- The GigaThread work scheduler distributes CUDA thread blocks to streaming multiprocessors with available capacity, dynamically balancing the computing workload across the GPU, and running multiple kernel tasks in parallel when appropriate.

- The multi-threaded streaming multiprocessors schedule and execute CUDA thread blocks and individual threads.

- Each streaming multiprocessor executes up to 1,536 concurrent threads to help cover long latency loads from DRAM memory. As each thread block completes executing its kernel program and releases its streaming multiprocessor resources, the work scheduler assigns a new thread block to that streaming multiprocessor.

- The PCIe host interface connects the GPU and its DRAM memory with the host CPU and system memory.

- The streaming multiprocessor threads access system memory via the PCIe interface, and CPU threads access GPU DRAM memory via PCIe.

- The GPU architecture balances its parallel computing power with parallel DRAM memory controllers designed for high memory bandwidth.

- Fermi introduces a parallel cached memory hierarchy for load, store, and atomic memory accesses by general applications.

- Each streaming multiprocessor has a first-level (L1) data cache, and the streaming multi- processors share a common 768-Kbyte unified second-level (L2) cache.

- The L2 cache connects with six 64-bit DRAM interfaces and the PCIe interface, which connects with the host CPU, system memory, and PCIe devices.

- It caches DRAM memory locations and system memory pages accessed via the PCIe interface.

- The unified L2 cache services load, store, atomic, and texture instruction requests from the streaming multiprocessors and requests from their L1 caches, and fills the streaming multiprocessor instruction caches and uniform data caches.

- (# Note: In GPU graphics, a texture is an image or data map applied to a 3D object’s surface. Texture instructions fetch and manipulate this data efficiently; in computing, “texture memory” can also be used as a read-only cached memory space optimized for certain access patterns #)

- Fermi implements a 40-bit physical address space that accesses GPU DRAM, CPU system memory, and PCIe device addresses. It provides a 40-bit virtual address space to each application context and maps it to the physical address space with translation lookaside buffers and page tables.

- Fermi ECC corrects single-bit errors and detects double-bit errors in the DRAM memory, GPU L2 cache, L1 caches, and streaming multiprocessor registers.

- The ECC lets us integrate thousands of GPUs in a system while maintaining a high mean time between failures (MTBF) for high-performance computing and super-computing systems.

- (# Note: Mean Time Between Failures (MTBF) is the average time a system or component operates before a failure occurs. Higher MTBF indicates more reliable hardware, crucial when thousands of GPUs work together in HPC systems #)

Streaming multiprocessor

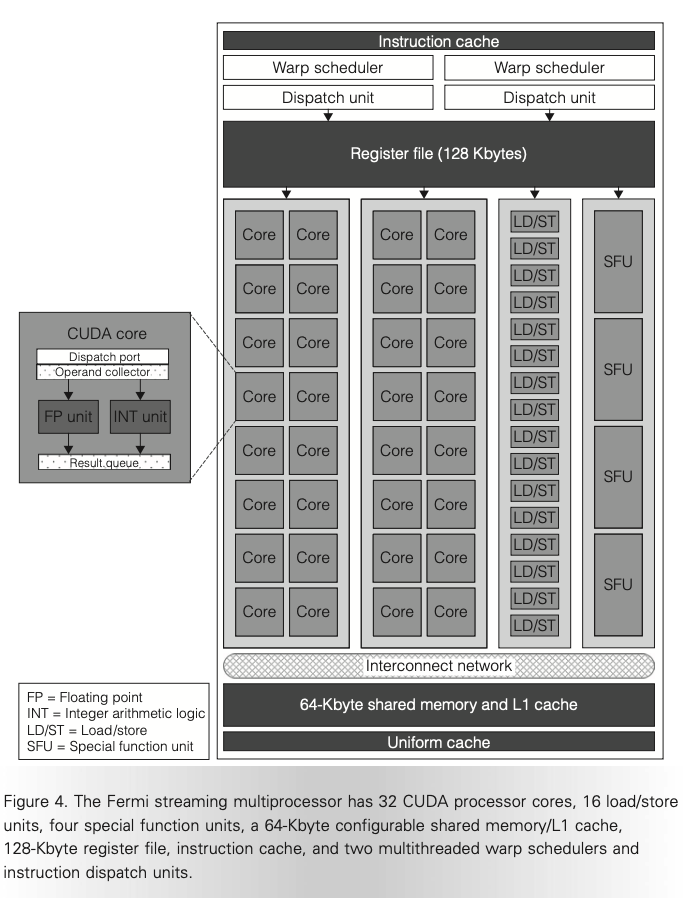

- The streaming multiprocessor implements zero-overhead multithreading and thread scheduling for up to 1,536 concurrent threads.

- (# Note: The SM supports zero-overhead multithreading by maintaining hardware context for each thread—registers, program counter, and state—so switching between 1,536 threads happens instantly without saving/restoring state. For example, if an SM has 32 cores and each warp has 32 threads, it can manage 48 warps concurrently: 32 × 48 = 1,536 threads #)

- To efficiently manage and execute this many individual threads, the multiprocessor employs the single-instruction multiple-thread (SIMT) architecture introduced in the first unified computing GPU.

- The SIMT instruction logic creates, manages, schedules, and executes concurrent threads in groups of 32 parallel threads called warps.

- A CUDA thread block comprises one or more warps. Each Fermi streaming multiprocessor has two warp schedulers and two dispatch units that each select a warp and issue an instruction from the warp to 16 CUDA cores, 16 load/store units, or four SFUs.

- (# Note: Imagine a classroom divided into smaller study groups (warps). The teacher (warp scheduler) chooses one group at a time and gives them an instruction. The students in that group then split into roles: some write (cores), some fetch books (load/store units), and some handle special tricky problems (SFUs). This shows how warps are managed and distributed to different execution resources #)

- Because warps execute independently, the streaming multiprocessor can issue two warp instructions to appropriate sets of CUDA cores, load/store units, and SFUs.

- To support C, C++, and standard single-thread programming languages, each streaming multiprocessor thread is independent, having its own private registers, condition codes and predicates, private per-thread memory and stack frame, instruction address, and thread execution state.

- The SIMT instructions control the execution of an individual thread, including arithmetic, memory access, and branching and control flow instructions.

- For efficiency, the SIMT multiprocessor issues an instruction to a warp of 32 independent parallel threads.

- The streaming multiprocessor realizes full efficiency and performance when all threads of a warp take the same execution path.

- If threads of a warp diverge at a data-dependent conditional branch, execution serializes for each branch path taken, and when all paths complete, the threads converge to the same execution path.

- Parallel thread execution (PTX) instructions describe the execution of a single thread in a parallel CUDA program.

- The PTX instructions focus on scalar (rather than vector) operations to match standard scalar programming languages.

- Each pipelined CUDA core executes a scalar floating point or integer instruction per clock for a thread. With 32 cores, the streaming multiprocessor can execute up to 32 arithmetic thread instructions per clock.

- The integer unit implements 32-bit precision for scalar integer operations, including 32-bit multiply and multiply-add operations, and efficiently supports 64-bit integer operations.

- The Fermi CUDA core floating-point unit implements the IEEE 754-2008 floating-point arithmetic standard for 32-bit single-including fused multiply-add (FMA) instructions.

- FMA computes D = A * B + C with no loss of precision by retaining full precision in the intermediate product and addition, then rounding the final sum to form the result.

- Using FMA enables fast division and square-root operations with exactly rounded results.

- Fermi raises the throughput of 64-bit double-precision operations to half that of single precision operations, a dramatic improvement over the T10 GPU.

- The SFUs execute 32-bit floating-point instructions for fast approximations of reciprocal, reciprocal square root, sin, cos, exp, and log functions.

- The streaming multiprocessor load/store units execute load, store, and atomic memory access instructions.

- A warp of 32 active threads presents 32 individual byte addresses, and the instruction accesses each memory address. The load/store units coalesce 32 individual thread accesses into a minimal number of memory block accesses.

- (# Note: Picture 32 people each ordering one item from a store. Instead of processing 32 separate trips, the store groups the orders into as few bulk deliveries as possible. This is how memory coalescing works — combining many small requests into fewer large, efficient ones #)

- (# Note: Think of 32 friends each mailing a letter to the same neighborhood. Instead of sending 32 separate mail trucks, the post office bundles the letters and sends them together in one truck. That’s memory coalescing — merging many nearby requests into one efficient transfer #)

- Fermi implements a unified thread address space that accesses the three separate parallel memory spaces of Figure 1: per-thread local, per-block shared, and global memory spaces.

- A unified load/store instruction can access any of the three memory spaces, steering the access to the correct memory, which enables general C and C++ pointer access anywhere.

- Fermi provides a terabyte 40-bit unified byte address space, and the load/store ISA supports 64-bit byte addressing for future growth. The ISA also provides 32-bit addressing instructions when the program can limit its accesses to the lower 4 Gbytes of address space.

- On-chip shared memory provides low-latency, high-bandwidth access to data shared by cooperating threads in the same CUDA thread block.

- Fast shared memory significantly boosts the performance of many applications having predictable regular addressing patterns, while reducing DRAM memory traffic.

- Fermi introduces a configurable-capacity L1 cache to aid unpredictable or irregular memory accesses, along with a configurable-capacity shared memory.

- Each streaming multiprocessor has 64 Kbytes of on-chip memory, configurable as 48 Kbytes of shared memory and 16 Kbytes of L1 cache, or as 16 Kbytes of shared memory and 48 Kbytes of L1 cache.

CPU+GPU co-processing

- Heterogeneous CPU+GPU co-processing systems evolved because the CPU and GPU have complementary attributes that allow applications to perform best using both types of processors.

- CUDA programs are coprocessing programs—serial portions execute on the CPU, while parallel portions execute on the GPU. Coprocessing optimizes total application performance.

- With coprocessing, we use the right core for the right job. We use a CPU core (optimized for low latency on a single thread) for a code’s serial portions, and we use GPU cores (optimized for aggregate throughput on a code’s parallel portions) for parallel portions of code.

- This approach gives more performance per unit area or power than either CPU or GPU cores alone.

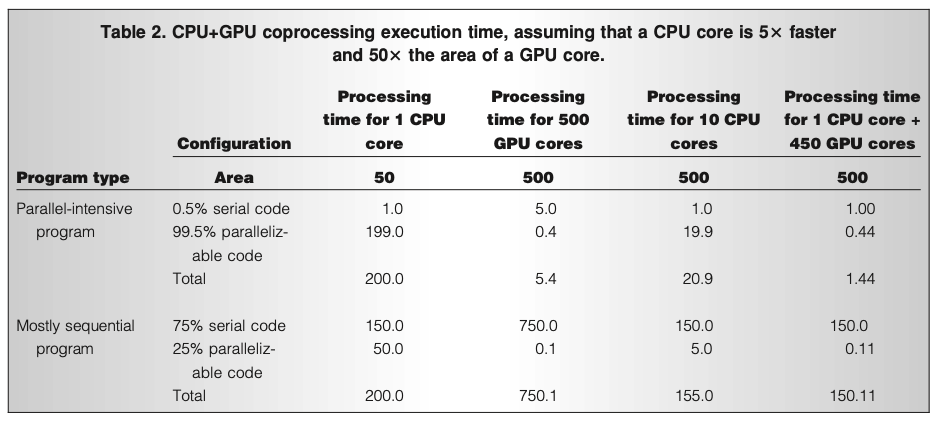

- The comparison in Table 2 illustrates the advantage of CPU+GPU coprocessing using Amdahl’s law.

- (# Note: Amdahl’s Law predicts the maximum speedup of a program using multiple processors, based on the fraction of the code that must run sequentially. Even if 90% of a program is parallelizable, the remaining 10% limits total speedup, showing why a CPU+GPU combination can outperform a pure GPU for mixed workloads #)

- The table compares the performance of four configurations:

- a system containing one latency-optimized (CPU) core,

- a system containing 500 throughput-optimized (GPU) cores,

- a system containing 10 CPU cores, and

- a coprocessing system that contains a single CPU core and 450 GPU cores.

- Table 2 assumes that a CPU core is 5 faster and 50 the area of a GPU core—numbers consistent with contemporary CPUs and GPUs. The coprocessing system devotes 10 percent of its area to the single CPU core and 90 percent of its area to the 450 GPU cores.

- The coprocessing architecture is the fastest on both programs.

- On the parallel-intensive program, the coprocessing architecture is slightly slower on the parallel portion than the pure GPU configuration (0.44 seconds versus 0.40 seconds) but more than makes up for this by running the tiny serial portion 5 faster (1 second versus5 seconds). The heterogeneous architecture has an advantage over the pure throughput- optimized configuration here because serial performance is important even for mostly parallel codes.

- Even on mostly sequential codes, it’s more efficient to run the code’s parallel portion on a throughput-optimized architecture.

- The coprocessing architecture provides the best performance across a wide range of the serial fraction because it uses the right core for each task.

- By using a latency- optimized CPU to run the code’s serial fraction, it gives the best possible performance on the serial fraction—which is important even for mostly parallel codes.

- By using throughput-optimized cores to run the code’s parallel portion, it gives near- optimal performance on the parallel fraction as well—which becomes increasingly important as codes become more parallel.

- It’s wasteful to use large, inefficient latency-optimized cores to run parallel code segments.

Application performance

- Many applications consist of a mixture of fundamentally serial control logic and inherently parallel computations.

- Furthermore, these parallel computations are frequently data-parallel in nature. This directly matches the CUDA coprocessing programming model, namely a sequential control thread capable of launching a series of parallel kernels.

- The use of parallel kernels launched from a sequential program also makes it relatively easy to parallelize an application’s individual components rather than rewrite the entire application.

- (# Note: Imagine renovating a house — instead of rebuilding the whole house from scratch, you can work on individual rooms in parallel (kitchen, bathroom, bedroom) while the overall house structure stays the same. Similarly, parallel kernels let you speed up parts of a program without rewriting the entire application #)

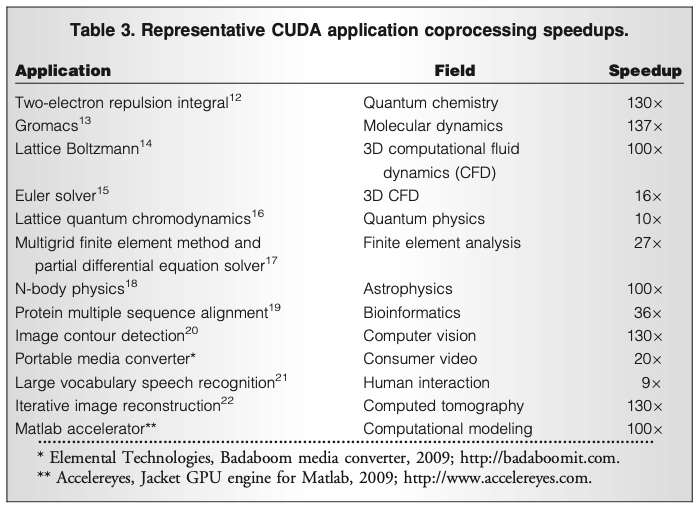

- Table 3 lists some representative applications along with the runtime speedups obtained for the whole application using CPU+GPU coprocessing over CPU alone, as measured by application developers.

- The speedups using GeForce 8800, Tesla T8, GeForce GTX 280, Tesla T10, and GeForce GTX 285 range from 9 to more than 130 , with the higher speedups reflecting applications where more of the work ran in parallel on the GPU.

- The lower speedups—while still quite attractive—represent applications that are limited by the code’s CPU portion, coprocessing overhead, or by divergence in the code’s GPU fraction.

- The speedups achieved on this diverse set of applications validate the programmability of GPUs—in addition to their performance.

- Applications with dense matrices, sparse matrices, and arbitrary pointer structures have all been successfully implemented in CUDA with impressive speedups. Similarly, applications with diverse control structures and significant data-dependent control, such as ray tracing, have achieved good performance in CUDA.

- Many real-world applications (such as interactive ray tracing) are composed of many different algorithms, each with varying degrees of parallelism.

- (# Note: Ray tracing is a graphics technique that simulates the path of light rays to produce realistic images with reflections, shadows, and refractions. Each ray’s calculation is independent, making it highly parallelizable on GPUs #)

- OptiX, our interactive ray-tracing software developer’s kit built in the CUDA architecture, provides a mechanism to control and schedule a wide variety of tasks on both the CPU and GPU.

- Some tasks are primarily serial and execute on the CPU, such as compilation, data structure management, and coordination with the operating system and user interaction.

- Other tasks, such as building an acceleration structure or updating animations, may run either on the CPU or the GPU depending on the choice of algorithms and the performance required.

- The net result is that CPUþGPU coprocessing enables fast, interactive ray tracing of complex scenes while you watch, which is an application that researchers previously considered too irregular for a GPU.

- GPU computing is at the tipping point. Single-threaded processor performance is no longer scaling at historic rates.

- Thus, we must use parallelism for the increased performance required to deliver more value to users.

- A GPU that’s optimized for throughput delivers parallel performance much more efficiently than a CPU that’s optimized for latency.

- A heterogeneous coprocessing architecture that combines a single latency-optimized core (a CPU) with many throughput-optimized cores (a GPU) performs better than either alternative alone.

- This is because it uses the right processor for the right job—the CPU for serial sections and critical paths and the GPU for the parallel sections.

- In high-performance computing, technical computing, and consumer media processing, CPU+GPU coprocessing has become the architecture of choice.

- GPU architecture will evolve to further increase the span of applications that it can efficiently address.

- GPU cores will not become CPUs—they will continue to be optimized for throughput, rather than latency.

- However, they will evolve to become more agile and better able to handle arbitrary control and data access patterns.

Minor details that are frequently misunderstood:

- A thread block always executes on one SM. Multiple smaller thread blocks may be present on one SM. There are more threads than execution units (“cuda cores”) on an SM which means not every thread gets to schedule a new instruction each clock cycle. That’s okay because threads often wait for memory or floating point operations that take multiple clock cycles to finish – Homer512

- Warp and block scheduling in CUDA - what exactly happens, and questions about eligible warps

- How many CUDA cores is used to process a CUDA warp?

- Confusion around no of CUDA Cores and the number of parallel threads