Reinforcement Learning - An Introductory Guide

Reinforcement Learning (RL) is a fascinating field that focuses on teaching agents how to make decisions based on their environment. The agent’s goal is to learn a strategy that maximizes a cumulative reward over time by interacting with its surroundings. But before we dive into the details of RL, let’s explore the fundamental concepts of decision-making and intelligence.

What is Decision Making?

At its core, decision-making is the process of choosing the best possible action from a set of alternatives. It lies at the heart of intelligence and is fundamental to building intelligent systems.

What is Intelligence?

Intelligence can be broadly defined as the ability to:

- Understand and process what’s happening around you.

- Make informed decisions.

- Reason and analyze complex situations.

In simpler terms, intelligence enables us to learn, adapt, and tackle new or challenging scenarios effectively.

Why Intelligence and How Does It Work?

A strong driving force for the evolution of intelligence is survival. For instance, early single-celled organisms relied on simple hunting strategies. Over time, the emergence of multicellular organisms, like C. elegans, marked a leap in complexity. They developed neurons, enabling coordination and more sophisticated strategies.

While the exact mechanisms of intelligence remain elusive, certain principles stand out:

- Neurons form the foundation of biological intelligence.

- Artificial neural networks via backpropagation, despite their inspiration from biology, cannot replicate human intelligence yet.

- Understanding the principles of intelligence is arguably more important than mimicking its mechanisms.

Six Lessons from Babies

Developmental psychology offers valuable insights into intelligence. Here are six key lessons we can learn from how babies develop:

- Be Multimodal: Babies combine sensory inputs (sight, sound, touch, etc.) to create a cohesive understanding of their environment.

- Be Incremental: Learning is gradual. Babies adapt as they encounter new information.

- Unlike i.i.d. data in supervised learning, real-world learning is sequential and non-i.i.d., posing unique challenges.

- RL algorithms often simulate i.i.d.-like scenarios to work effectively.

- Be Physical: Interaction with the environment is crucial. Babies learn by manipulating objects and observing the outcomes.

- Explore: Exploration is central to learning. Babies experiment with their surroundings to gather information and refine their actions.

- Be Social: Social interactions play a significant role. Babies learn by observing and imitating others.

- Learn a Language: Language serves as a symbolic framework to organize thoughts and retrieve information efficiently.

These principles are directly relevant to reinforcement learning (RL), where agents learn by interacting with and exploring their environment.

Takeaways Thus Far;

- Intelligence is fundamentally rooted in decision-making.

- Decision-making occurs at various levels, from low-level motor control to high-level reasoning and coordination.

- Algorithms for decision-making depend heavily on the specific task.

- Neuroscience, motor control, and cognitive psychology provide valuable insights for designing intelligent systems.

- Translating biological insights into computational systems remains challenging due to a lack of foundational understanding.

A Computational Lens on Decision Making

Decision-making can be viewed through the following computational frameworks:

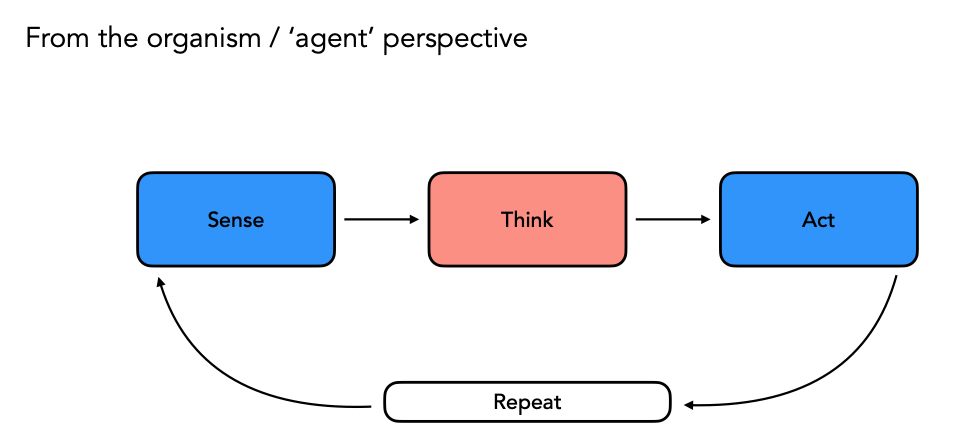

From an Agent’s Perspective:

- Sense

- Think

- Act

- Repeat

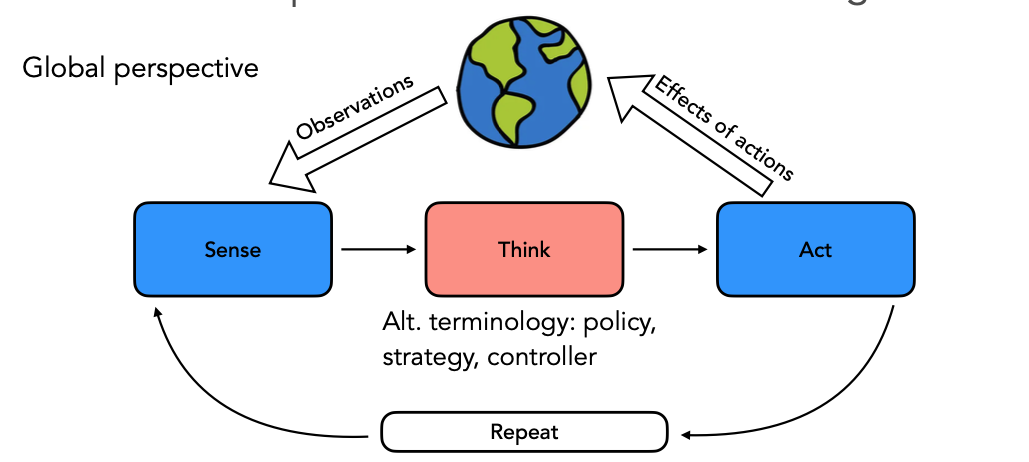

From a Global Perspective:

- Observations

- Sense → Think → Act

- Effects of Actions

- Repeat

Alternate terminologies for decision-making systems include policy, strategy, and controller.

Examples of Computational Decision Making

- Atari Games (Deep RL): Agents maximize scores by analyzing game frames and selecting actions accordingly.

- Google Robots: Robots perform complex tasks using intricate joint control mechanisms.

- Go: RL has enabled agents to outperform humans in this game with a vast decision space.

- Dota 2: RL systems have been trained to defeat top human players by optimizing strategies in a dynamic environment. Though this success is currently limited to controlled and restricted settings.

Reinforcement learning provides a robust framework to model decision-making by teaching agents to optimize actions based on rewards.

The Rewards Mechanism and Sequential Decision Making

In RL, decision-making unfolds over time through a sequence of observations, actions, and rewards. This sequence can be represented as:

\[(o_1, a_1, r_1) \to (o_2, a_2, r_2) \to \dots \to (o_n, a_n, r_n)\]Where:

- \(o_t\): Observation at time \(t\),

- \(a_t\): Action taken at time \(t\),

- \(r_t\): Reward received after taking action \(a_t\).

The objective is to maximize the cumulative reward:

\[\max_{a_1, a_2, \dots, a_{T-1}} \sum_{t=1}^{T} r_t\]The key challenge is determining the optimal actions \(a_t\) at each time step to achieve the maximum long-term reward. This leads to the concept of policy optimization.

Planning and World Models

Another approach to decision-making is through planning, where the agent uses a model of the world to simulate the effects of its actions. This allows the agent to reason about potential future states and make decisions accordingly.

However, planning is limited in its applicability:

- It works well in discrete, well-defined environments like games.

- It struggles with complex, dynamic tasks like conversational AI or real-world robotics.

The Limits of Current Approaches

Despite their potential, RL and planning-based models face significant challenges:

- Real-world scenarios often lack the i.i.d. assumption that RL sometime relies on.

- Bridging the gap between controlled simulations and dynamic real-world environments remains a key hurdle.

A Note of Caution Amidst Progress

While reinforcement learning and computational decision-making have seen remarkable progress, it’s important to recognize the challenges that remain. This brings us to Moravec’s Paradox, a fascinating insight into the nature of artificial intelligence:

“It is comparatively easy to make computers exhibit adult-level performance on intelligence tests or play games like chess, yet it is extremely difficult to give them the skills of a one-year-old when it comes to perception and mobility.”

— Hans Moravec, 1988

Steven Pinker elaborated further:

“The main lesson of thirty-five years of AI research is that the hard problems are easy, and the easy problems are hard.”

— Steven Pinker, 1994

What this paradox highlights is that tasks humans find effortless—such as walking, recognizing faces, or interacting physically with the environment—require immense computational power and intricate modeling to replicate in machines. Conversely, tasks like playing chess, solving mathematical problems, or optimizing game strategies are relatively easier for computers.

This paradox underscores the fact that intelligence, especially in its perceptual and physical forms, is deeply rooted in evolutionary processes. The interplay of sensory data, motor control, and real-world adaptation—elements essential for robust intelligence—remains a significant challenge for machines.

In reinforcement learning, we see this reflected in the difficulty of training agents to generalize to unstructured, real-world environments. RL agents perform admirably in games like Atari or Go, but replicating even the basic capabilities of a human child—like balancing on uneven surfaces or adapting to novel stimuli—remains an open frontier.

Conclusion

Reinforcement learning provides a powerful framework for decision-making, allowing agents to learn from their interactions with the environment. By leveraging concepts such as reward mechanisms, policy optimization, and planning, we have achieved significant milestones in fields like gaming, robotics, and autonomous systems.

However, the journey toward creating truly intelligent systems is far from over. While computational models continue to evolve, there is a need for a deeper understanding of the principles of intelligence, both biological and artificial.

As we wrap up this introduction to reinforcement learning and decision-making, it’s clear that intelligence is fundamentally about making informed decisions. Whether through supervised learning, reinforcement learning, or planning, the goal remains the same: enabling machines to reason, adapt, and thrive in dynamic environments.

In the next post, we’ll dive deeper into decision-making in supervised learning and explore how it serves as the foundation for many modern AI systems. Stay tuned!